Bivariate Distributions Pdf


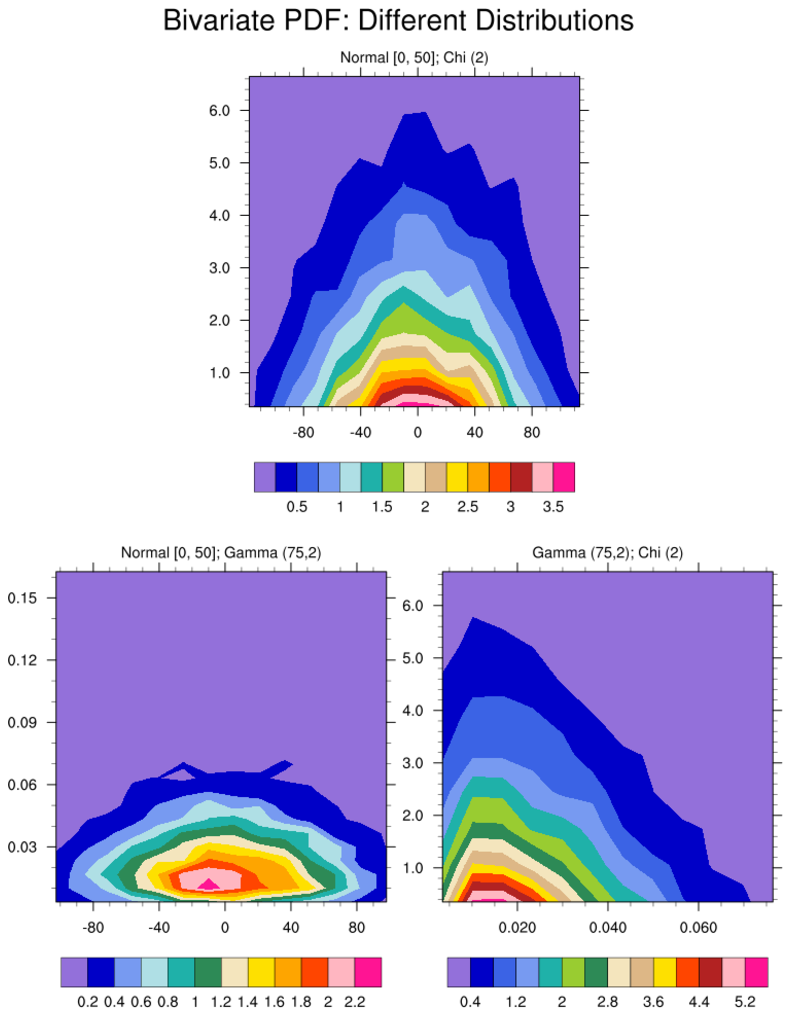

Bivariate distributions of continuous random variables: when there are two continuous random variables, the equivalent of the two-dimensional array is a region of the x-y (Cartesian) plane. Above the plane, over the region of interest, is a surface that represents the probability density function associated with the bivariate distribution.

A bivariate distribution gives the probabilities of the joint outcomes of two random variables, which need not be independent. It can be presented as a list or as a table.

For the bivariate normal distribution, suppose two pairs of random variables $(X,Y)$ and $(X',Y')$ are associated with the same multivariate transform. Since the multivariate transform completely determines the joint PDF, the pair $(X,Y)$ has the same joint PDF as the pair $(X',Y')$. Since $X'$ and $Y'$ are independent, $X$ and $Y$ must be independent as well.

The aims here are to extend the definition of the conditional probability of events to the conditional probability distribution of a random variable $X$ given that $Y$ has taken a particular value, and to investigate a particular joint probability distribution, namely the bivariate normal distribution.


Given random variables $X, Y, \ldots$ that are defined on a probability space, the joint probability distribution for $X, Y, \ldots$ is a probability distribution that gives the probability that each of $X, Y, \ldots$ falls in any particular range or discrete set of values specified for that variable. In the case of only two random variables, this is called a bivariate distribution, but the concept generalizes to any number of random variables, giving a multivariate distribution.

The joint probability distribution can be expressed either in terms of a joint cumulative distribution function or in terms of a joint probability density function (in the case of continuous variables) or joint probability mass function (in the case of discrete variables). These in turn can be used to find two other types of distributions: the marginal distribution giving the probabilities for any one of the variables with no reference to any specific ranges of values for the other variables, and the conditional probability distribution giving the probabilities for any subset of the variables conditional on particular values of the remaining variables.


Examples

Draws from an urn

Suppose each of two urns contains twice as many red balls as blue balls, and no others, and suppose one ball is randomly selected from each urn, with the two draws independent of each other. Let $A$ and $B$ be discrete random variables associated with the outcomes of the draw from the first urn and second urn respectively. The probability of drawing a red ball from either of the urns is 2/3, and the probability of drawing a blue ball is 1/3. We can present the joint probability distribution as the following table:

| | A=Red | A=Blue | P(B) |
|---|---|---|---|
| B=Red | (2/3)(2/3)=4/9 | (1/3)(2/3)=2/9 | 4/9+2/9=2/3 |
| B=Blue | (2/3)(1/3)=2/9 | (1/3)(1/3)=1/9 | 2/9+1/9=1/3 |
| P(A) | 4/9+2/9=2/3 | 2/9+1/9=1/3 | |

Each of the four inner cells shows the probability of a particular combination of results from the two draws; these probabilities are the joint distribution. In any one cell the probability of a particular combination occurring is (since the draws are independent) the product of the probability of the specified result for A and the probability of the specified result for B. The probabilities in these four cells sum to 1, as is always true for probability distributions.

Moreover, the final row and the final column give the marginal probability distribution for A and the marginal probability distribution for B respectively. For example, for A the first of these cells gives the sum of the probabilities for A being red, regardless of which possibility for B in the column above the cell occurs, as 2/3. Thus the marginal probability distribution for $A$ gives $A$'s probabilities unconditional on $B$, in a margin of the table.
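The table can be reproduced with a few lines of Python. This is only a sketch of the urn example: the dictionary names are illustrative, and exact fractions are used so that the cells match the table without rounding.

```python
from fractions import Fraction
from itertools import product

# Marginal distributions for each urn (twice as many red balls as blue).
p_a = {"Red": Fraction(2, 3), "Blue": Fraction(1, 3)}
p_b = {"Red": Fraction(2, 3), "Blue": Fraction(1, 3)}

# The draws are independent, so each joint cell is a product of marginals.
joint = {(a, b): p_a[a] * p_b[b] for a, b in product(p_a, p_b)}

# Marginalize: sum the joint probabilities over the other variable.
marg_a = {a: sum(joint[(a, b)] for b in p_b) for a in p_a}
marg_b = {b: sum(joint[(a, b)] for a in p_a) for b in p_b}

total = sum(joint.values())
print(joint[("Red", "Red")], marg_a["Red"], marg_b["Blue"], total)
```

Summing the joint cells over one variable recovers the marginal of the other, which is exactly what the final row and column of the table record.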

Coin flips

Consider the flip of two fair coins; let $A$ and $B$ be discrete random variables associated with the outcomes of the first and second coin flips respectively. Each coin flip is a Bernoulli trial and has a Bernoulli distribution. If a coin displays 'heads' then the associated random variable takes the value 1, and it takes the value 0 otherwise. The probability of each of these outcomes is 1/2, so the marginal (unconditional) probability mass functions are

$$P(A) = 1/2 \quad \text{for } A \in \{0, 1\}; \qquad P(B) = 1/2 \quad \text{for } B \in \{0, 1\}.$$

The joint probability mass function of $A$ and $B$ defines probabilities for each pair of outcomes. All possible outcomes are

$$(A=0, B=0),\quad (A=0, B=1),\quad (A=1, B=0),\quad (A=1, B=1).$$

Since each outcome is equally likely, the joint probability mass function becomes

$$P(A, B) = 1/4 \quad \text{for } A, B \in \{0, 1\}.$$

Since the coin flips are independent, the joint probability mass function is the product of the marginals:

$$P(A, B) = P(A)\,P(B) \quad \text{for } A, B \in \{0, 1\}.$$

Roll of a die

Consider the roll of a fair die and let $A=1$ if the number is even (i.e. 2, 4, or 6) and $A=0$ otherwise. Furthermore, let $B=1$ if the number is prime (i.e. 2, 3, or 5) and $B=0$ otherwise.

| | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|
| A | 0 | 1 | 0 | 1 | 0 | 1 |
| B | 0 | 1 | 1 | 0 | 1 | 0 |

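Then, the joint distribution of $A$ and $B$, expressed as a probability mass function, is

$$P(A=0, B=0) = P\{1\} = \frac{1}{6}, \qquad P(A=1, B=0) = P\{4, 6\} = \frac{2}{6},$$

$$P(A=0, B=1) = P\{3, 5\} = \frac{2}{6}, \qquad P(A=1, B=1) = P\{2\} = \frac{1}{6}.$$

These probabilities necessarily sum to 1, since the probability of some combination of $A$ and $B$ occurring is 1.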
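The joint mass function of the die example can be obtained by counting faces. The following is a minimal sketch; the helper name `indicators` is illustrative and not part of the original text.

```python
from collections import Counter
from fractions import Fraction

faces = range(1, 7)
primes = {2, 3, 5}

def indicators(face):
    """Return (A, B): A = 1 if the face is even, B = 1 if the face is prime."""
    return (int(face % 2 == 0), int(face in primes))

# Each face has probability 1/6, so count how many faces map to each (A, B).
counts = Counter(indicators(f) for f in faces)
joint_pmf = {ab: Fraction(n, 6) for ab, n in counts.items()}

for ab in sorted(joint_pmf):
    print(ab, joint_pmf[ab])
```

Because every face lands in exactly one $(A, B)$ cell, the four probabilities add up to 1.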

Bivariate normal distribution

The multivariate normal distribution, which is a continuous distribution, is the most commonly encountered distribution in statistics. When there are specifically two random variables, this is the bivariate normal distribution, shown in the graph, with the possible values of the two variables plotted in two of the dimensions and the value of the density function for any pair of such values plotted in the third dimension. The probability that the two variables together fall in any region of their two dimensions is given by the volume under the density function above that region.
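The "volume under the density" statement can be checked numerically. The sketch below assumes a standard bivariate normal (zero means, unit variances, correlation `rho`) and a plain midpoint rule on a rectangle; the function names and grid size are illustrative choices, not part of the original text.

```python
import math

def bivariate_normal_pdf(x, y, rho=0.0):
    """Density of a standard bivariate normal with correlation rho (|rho| < 1)."""
    norm = 1.0 / (2.0 * math.pi * math.sqrt(1.0 - rho * rho))
    q = (x * x - 2.0 * rho * x * y + y * y) / (1.0 - rho * rho)
    return norm * math.exp(-0.5 * q)

def rectangle_probability(x_lo, x_hi, y_lo, y_hi, rho=0.0, n=200):
    """Approximate the volume under the density over a rectangle (midpoint rule)."""
    hx = (x_hi - x_lo) / n
    hy = (y_hi - y_lo) / n
    total = 0.0
    for i in range(n):
        x = x_lo + (i + 0.5) * hx
        for j in range(n):
            y = y_lo + (j + 0.5) * hy
            total += bivariate_normal_pdf(x, y, rho)
    return total * hx * hy

# Probability that both variables fall within one standard deviation of 0.
p_square = rectangle_probability(-1.0, 1.0, -1.0, 1.0)
print(round(p_square, 4))
```

With `rho = 0` the two variables are independent, so the result should agree with the square of the one-dimensional probability `math.erf(1 / math.sqrt(2))`.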

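Joint cumulative distribution function

For a pair of random variables $X, Y$, the joint cumulative distribution function (CDF) $F_{X,Y}$ is given by[1]:p. 89

$$F_{X,Y}(x, y) = P(X \le x,\; Y \le y) \qquad \text{(Eq.1)}$$

where the right-hand side represents the probability that the random variable $X$ takes on a value less than or equal to $x$ and that $Y$ takes on a value less than or equal to $y$.

For $N$ random variables $X_1, \ldots, X_N$, the joint CDF $F_{X_1,\ldots,X_N}$ is given by

$$F_{X_1,\ldots,X_N}(x_1, \ldots, x_N) = P(X_1 \le x_1, \ldots, X_N \le x_N) \qquad \text{(Eq.2)}$$

Interpreting the $N$ random variables as a random vector $\mathbf{X} = (X_1, \ldots, X_N)^T$ yields a shorter notation:

$$F_{\mathbf{X}}(\mathbf{x}) = P(X_1 \le x_1, \ldots, X_N \le x_N).$$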
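For discrete variables the joint CDF is just a sum of mass-function values over a lower-left quadrant. As a small illustration, the sketch below assumes a pair of 0/1-valued variables whose joint probabilities are those of the die example (even and prime indicators); the dictionary and function names are hypothetical.

```python
from fractions import Fraction

# Joint pmf of two 0/1-valued variables (X, Y). The values are those of the
# die example (X = 1 if even, Y = 1 if prime) and serve only as an illustration.
pmf = {
    (0, 0): Fraction(1, 6),
    (1, 0): Fraction(2, 6),
    (0, 1): Fraction(2, 6),
    (1, 1): Fraction(1, 6),
}

def joint_cdf(x, y):
    """F(x, y) = P(X <= x, Y <= y), summing the pmf over the lower-left quadrant."""
    return sum((p for (xi, yi), p in pmf.items() if xi <= x and yi <= y),
               Fraction(0))

print(joint_cdf(0, 0), joint_cdf(1, 0), joint_cdf(1, 1))
```

The function accepts any real arguments, so `joint_cdf(0.5, 10)` simply collects all mass with $X = 0$.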

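Joint density function or mass function

Discrete case

The joint probability mass function of two discrete random variables $X, Y$ is:

$$p_{X,Y}(x, y) = P(X = x \text{ and } Y = y) \qquad \text{(Eq.3)}$$

or, written in terms of conditional distributions,

$$p_{X,Y}(x, y) = P(Y = y \mid X = x) \cdot P(X = x) = P(X = x \mid Y = y) \cdot P(Y = y)$$

where $P(Y = y \mid X = x)$ is the probability of $Y = y$ given that $X = x$.

The generalization of the preceding two-variable case is the joint probability distribution of $n$ discrete random variables $X_1, X_2, \ldots, X_n$, which is:

$$p_{X_1,\ldots,X_n}(x_1, \ldots, x_n) = P(X_1 = x_1 \text{ and } \dots \text{ and } X_n = x_n) \qquad \text{(Eq.4)}$$

or equivalently

$$P(X_1 = x_1, \ldots, X_n = x_n) = P(X_1 = x_1) \cdot P(X_2 = x_2 \mid X_1 = x_1) \cdot P(X_3 = x_3 \mid X_1 = x_1, X_2 = x_2) \cdots P(X_n = x_n \mid X_1 = x_1, \ldots, X_{n-1} = x_{n-1}).$$

This identity is known as the chain rule of probability.

Since these are probabilities, we have in the two-variable case

$$\sum_i \sum_j P(X = x_i \text{ and } Y = y_j) = 1,$$

which generalizes for $n$ discrete random variables $X_1, X_2, \ldots, X_n$ to

$$\sum_i \sum_j \cdots \sum_k P(X_1 = x_{1i}, X_2 = x_{2j}, \ldots, X_n = x_{nk}) = 1.$$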
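The chain rule of probability is easiest to see with dependent draws. The sketch below is an assumed example that is not in the original text: three balls are drawn without replacement from a single urn holding 4 red and 2 blue balls, and each joint probability is built as a product of conditional probabilities. The function name and urn contents are hypothetical.

```python
from fractions import Fraction
from itertools import product

def sequence_probability(colors, red=4, blue=2):
    """P(X1 = c1, ..., Xn = cn) for draws without replacement, via the chain rule.

    Assumes len(colors) <= red + blue so the urn never runs empty.
    """
    prob = Fraction(1)
    for c in colors:
        remaining = red + blue
        if c == "R":
            prob *= Fraction(red, remaining)   # P(next is red | earlier draws)
            red -= 1
        else:
            prob *= Fraction(blue, remaining)  # P(next is blue | earlier draws)
            blue -= 1
    return prob

# Joint pmf over all 2**3 colour sequences of three draws.
joint = {seq: sequence_probability(seq) for seq in product("RB", repeat=3)}
print(joint[("R", "R", "B")], sum(joint.values()))
```

Each factor in the product is a conditional probability given the earlier draws, and the joint probabilities over all sequences sum to 1, as the normalization identities require.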

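Continuous case

The joint probability density function $f_{X,Y}(x, y)$ for two continuous random variables is defined as the derivative of the joint cumulative distribution function (see Eq.1):

$$f_{X,Y}(x, y) = \frac{\partial^2 F_{X,Y}(x, y)}{\partial x \, \partial y} \qquad \text{(Eq.5)}$$

This is equal to:

$$f_{X,Y}(x, y) = f_{Y \mid X}(y \mid x)\, f_X(x) = f_{X \mid Y}(x \mid y)\, f_Y(y)$$

where $f_{Y \mid X}(y \mid x)$ and $f_{X \mid Y}(x \mid y)$ are the conditional distributions of $Y$ given $X = x$ and of $X$ given $Y = y$ respectively, and $f_X(x)$ and $f_Y(y)$ are the marginal distributions for $X$ and $Y$ respectively.

The definition extends naturally to more than two random variables:

$$f_{X_1,\ldots,X_n}(x_1, \ldots, x_n) = \frac{\partial^n F_{X_1,\ldots,X_n}(x_1, \ldots, x_n)}{\partial x_1 \cdots \partial x_n} \qquad \text{(Eq.6)}$$

Again, since these are probability distributions, one has

$$\int_x \int_y f_{X,Y}(x, y) \, dy \, dx = 1$$

respectively

$$\int_{x_1} \cdots \int_{x_n} f_{X_1,\ldots,X_n}(x_1, \ldots, x_n) \, dx_n \cdots dx_1 = 1.$$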
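The relationship between the joint CDF and the joint density (the mixed second partial derivative) can be illustrated numerically. The sketch below assumes two independent exponential variables with rate 1, a choice made only for this example, and approximates the derivative with a central finite difference; the step size `h` is likewise an arbitrary illustrative value.

```python
import math

def joint_cdf(x, y):
    """Joint CDF of two independent Exp(1) variables (an assumed example)."""
    if x <= 0 or y <= 0:
        return 0.0
    return (1.0 - math.exp(-x)) * (1.0 - math.exp(-y))

def density_from_cdf(x, y, h=1e-3):
    """Central mixed finite difference approximating d^2 F / (dx dy)."""
    return (joint_cdf(x + h, y + h) - joint_cdf(x + h, y - h)
            - joint_cdf(x - h, y + h) + joint_cdf(x - h, y - h)) / (4.0 * h * h)

approx = density_from_cdf(1.0, 2.0)
exact = math.exp(-3.0)  # f(x, y) = exp(-x - y) at (1, 2)
print(approx, exact)
```

The two printed values should agree to several decimal places, since the finite-difference error shrinks with the square of `h`.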

Mixed case

The 'mixed joint density' may be defined where one or more random variables are continuous and the other random variables are discrete. With one variable of each type we have

$$f_{X,Y}(x, y) = f_{X \mid Y}(x \mid y)\, P(Y = y) = P(Y = y \mid X = x)\, f_X(x).$$

One example of a situation in which one may wish to find the cumulative distribution of one random variable which is continuous and another random variable which is discrete arises when one wishes to use a logistic regression in predicting the probability of a binary outcome $Y$ conditional on the value of a continuously distributed outcome $X$. One must use the 'mixed' joint density when finding the cumulative distribution of this binary outcome because the input variables $(X, Y)$ were initially defined in such a way that one could not collectively assign them either a probability density function or a probability mass function. Formally, $f_{X,Y}(x, y)$ is the probability density function of $(X, Y)$ with respect to the product measure on the respective supports of $X$ and $Y$. Either of these two decompositions can then be used to recover the joint cumulative distribution function:

$$F_{X,Y}(x, y) = \sum_{t \le y} \int_{s=-\infty}^{x} f_{X,Y}(s, t) \, ds.$$

The definition generalizes to a mixture of arbitrary numbers of discrete and continuous random variables.
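A rough sketch of the mixed case follows. It assumes, purely for illustration, that $X$ is standard normal and that $P(Y = 1 \mid X = x)$ is the logistic function of $x$ (a logistic regression with intercept 0 and slope 1); these modelling choices, the function names, and the integration settings are not from the original text.

```python
import math

def normal_pdf(x):
    """Density of a standard normal variable (assumed marginal of X)."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)

def sigmoid(x):
    """Assumed logistic model for P(Y = 1 | X = x)."""
    return 1.0 / (1.0 + math.exp(-x))

def mixed_density(x, y):
    """f_{X,Y}(x, y) = P(Y = y | X = x) * f_X(x) for y in {0, 1}."""
    p1 = sigmoid(x)
    return (p1 if y == 1 else 1.0 - p1) * normal_pdf(x)

def joint_cdf(x, y, lower=-8.0, n=4000):
    """F(x, y): sum over t <= y of the integral of f(s, t) for s up to x.

    The integral is approximated by a midpoint rule on [lower, x]; the mass
    below `lower` is treated as negligible.
    """
    if x <= lower:
        return 0.0
    h = (x - lower) / n
    total = 0.0
    for t in (0, 1):
        if t <= y:
            total += h * sum(mixed_density(lower + (i + 0.5) * h, t)
                             for i in range(n))
    return total

print(joint_cdf(8.0, 0), joint_cdf(8.0, 1))
```

Under these symmetric assumptions $P(Y = 0) = 1/2$, so the first value should be close to 0.5 and the second close to 1.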

Additional properties

Joint distribution for independent variables

In general two random variables $X$ and $Y$ are independent if and only if the joint cumulative distribution function satisfies

$$F_{X,Y}(x, y) = F_X(x) \cdot F_Y(y).$$

Two discrete random variables $X$ and $Y$ are independent if and only if the joint probability mass function satisfies

$$P(X = x \text{ and } Y = y) = P(X = x) \cdot P(Y = y)$$

for all $x$ and $y$.

As the number of independent random events grows, their joint probability decreases rapidly to zero, following a negative exponential law: for example, $n$ independent events that each have probability $p < 1$ all occur with probability $p^n$.

Similarly, two absolutely continuous random variables are independent if and only if

$$f_{X,Y}(x, y) = f_X(x) \cdot f_Y(y)$$

for all $x$ and $y$. This means that acquiring any information about the value of one or more of the random variables leads to a conditional distribution of any other variable that is identical to its unconditional (marginal) distribution; thus no variable provides any information about any other variable.
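The factorization criterion for discrete variables can be tested directly. The sketch below applies it to the two earlier examples: the urn draws, which are independent, and the even/prime indicators of a die roll, which are not. The helper name `is_independent` is illustrative.

```python
from fractions import Fraction

def is_independent(joint):
    """True if joint[(x, y)] == P(X = x) * P(Y = y) for every pair of values."""
    xs = {x for x, _ in joint}
    ys = {y for _, y in joint}
    p_x = {x: sum(joint.get((x, y), 0) for y in ys) for x in xs}
    p_y = {y: sum(joint.get((x, y), 0) for x in xs) for y in ys}
    return all(joint.get((x, y), 0) == p_x[x] * p_y[y] for x in xs for y in ys)

# Urn example: one ball from each of two urns, drawn independently.
urns = {
    ("Red", "Red"): Fraction(4, 9), ("Blue", "Red"): Fraction(2, 9),
    ("Red", "Blue"): Fraction(2, 9), ("Blue", "Blue"): Fraction(1, 9),
}

# Die example: A = 1 if even, B = 1 if prime, both from the same roll.
die = {
    (0, 0): Fraction(1, 6), (1, 0): Fraction(2, 6),
    (0, 1): Fraction(2, 6), (1, 1): Fraction(1, 6),
}

print(is_independent(urns), is_independent(die))
```

For the die, $P(A=1, B=1) = 1/6$ while $P(A=1)\,P(B=1) = 1/4$, so the product rule fails and the indicators are dependent.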

Joint distribution for conditionally dependent variables

If a subset $A$ of the variables $X_1, \ldots, X_n$ is conditionally dependent given another subset $B$ of these variables, then the probability mass function of the joint distribution, $P(X_1, \ldots, X_n)$, is equal to $P(B) \cdot P(A \mid B)$. Therefore, it can be efficiently represented by the lower-dimensional probability distributions $P(B)$ and $P(A \mid B)$. Such conditional independence relations can be represented with a Bayesian network or copula functions.

Important named distributions

Named joint distributions that arise frequently in statistics include the multivariate normal distribution, the multivariate stable distribution, the multinomial distribution, the negative multinomial distribution, the multivariate hypergeometric distribution, and the elliptical distribution.

References

- [1] Park, Kun Il (2018). Fundamentals of Probability and Stochastic Processes with Applications to Communications. Springer. ISBN 978-3-319-68074-3.

External links

- Hazewinkel, Michiel, ed. (2001) [1994], 'Joint distribution', Encyclopedia of Mathematics, Springer Science+Business Media B.V. / Kluwer Academic Publishers, ISBN 978-1-55608-010-4

- Hazewinkel, Michiel, ed. (2001) [1994], 'Multi-dimensional distribution', Encyclopedia of Mathematics, Springer Science+Business Media B.V. / Kluwer Academic Publishers, ISBN 978-1-55608-010-4

- 'Joint continuous density function'. PlanetMath.